This post originally appeared in the February 20, 2020 issue with the email subject line "Somewhere along the continuum between Twitter and Shakespeare" and a review of content research software MarketMuse.

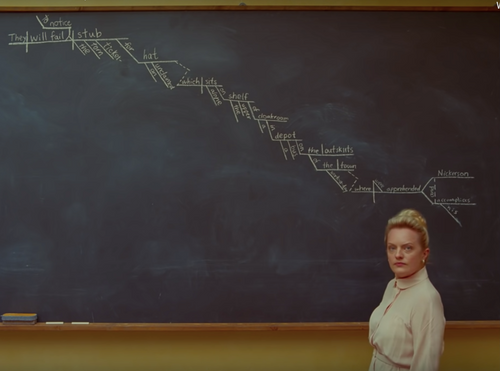

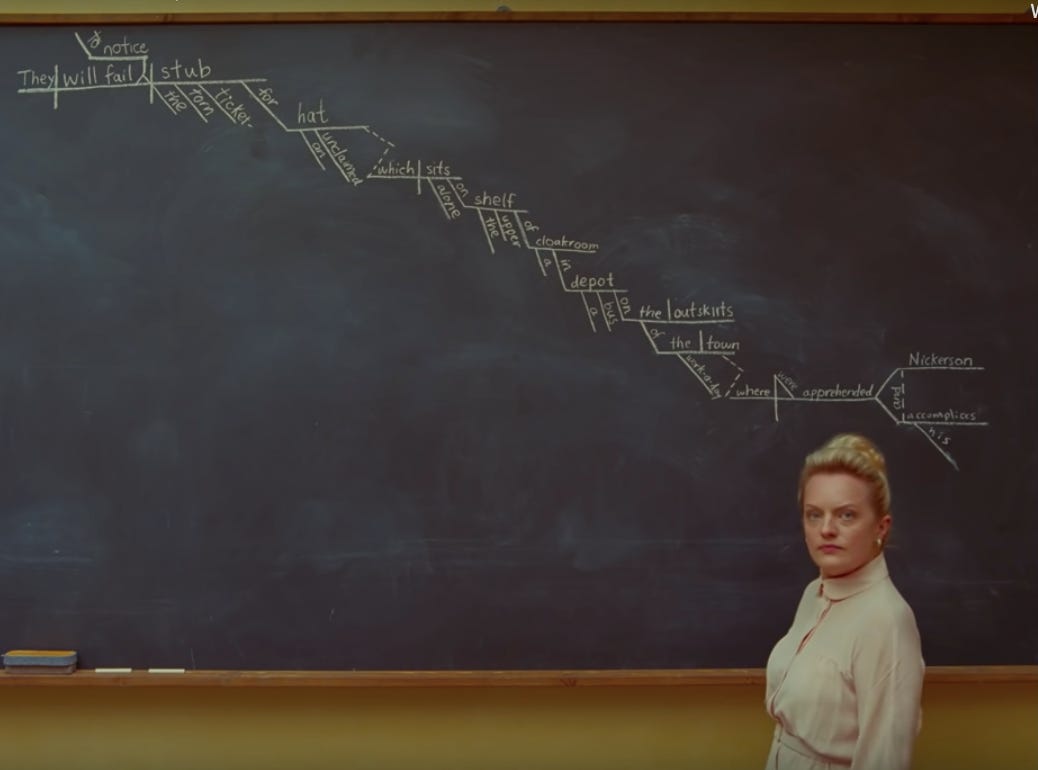

From grade school through college I found relief in math applied to language: diagramming sentences or scanning poetry. My editorial sensibility loves a Hemingway-esque reduction of sentences. I love striking a proverbial red pen through unnecessary verbosity: “in order to” and “just” and every unnecessary adverb or prepositional phrase. Reduce the sentence. Give meaning through word choice rather than wordiness. One of my favorite Nick Cave lyrics is:

Prolix, prolix: nothing a pair of scissors can’t fix.

Language processing formulas behave similarly: they break complex text down into component parts and assign meaning, identifying the statistical likelihood that one word will occur in relation to another. This process assumes that connotation and tone do not exist, or as stated in the documentation of the Word2Vec algorithm:

Words are simply discrete states like the other data mentioned above

We know that words are not numbers, but language processing formulas treat them as such, looking at how often certain words appear in conjunction with others. Here are some common language processing formulas:

Flesch–Kincaid/Flesch Reading Ease

You’re most likely familiar with these old friends of language formulas, which have been included in Microsoft Word since the beginning of time.

Flesch–Kincaid scores were developed in the 70s and are widely used throughout software and incorporated into public policy as a measure of “readability,” for better or worse. The formula assigns lower grade levels to text with words that have fewer syllables and sentences that have fewer words. Yes, the formula is a little more complex than that, but… not really?
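To show how little is going on under the hood, here is a minimal sketch of both scores in Python. The constants are the standard published ones for Flesch Reading Ease and Flesch–Kincaid Grade Level. The syllable counter is my own rough assumption (it counts runs of vowels), not what Microsoft Word or any other tool actually uses, and the function names are made up for this example.

```python
import re


def count_syllables(word):
    """Rough heuristic (an assumption): count runs of vowels in the word."""
    lowered = word.lower()
    count = len(re.findall(r"[aeiouy]+", lowered))
    # Treat a trailing "e" as silent ("make"), but keep "-le" endings ("table").
    if lowered.endswith("e") and not lowered.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def readability(text):
    """Return Flesch Reading Ease and Flesch-Kincaid Grade Level for text."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one word")
    syllables = sum(count_syllables(w) for w in words)
    words_per_sentence = len(words) / len(sentences)
    syllables_per_word = syllables / len(words)
    return {
        "reading_ease": 206.835 - 1.015 * words_per_sentence - 84.6 * syllables_per_word,
        "grade_level": 0.39 * words_per_sentence + 11.8 * syllables_per_word - 15.59,
    }


simple = readability("The cat sat. The dog ran.")
dense = readability("Unnecessary verbosity complicates comprehension considerably.")
print(simple["grade_level"] < dense["grade_level"])
```

Only two ratios feed the score: words per sentence and syllables per word. Nothing in the formula looks at what the words mean.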

Advantages: Super common! Intro-level language algorithms! Helps steer teams toward plain language and away from jargon.

Disadvantages: Does a word’s syllabic content actually reflect its complexity? What happens when there are multiple meanings squirreled away in one-syllable words? How much has written language changed in 45 years? Should we update this formula?*

*Many thanks to a former client of mine who created an entire deck explaining why Flesch–Kincaid scores were detrimental to that company’s content strategy.

TF-IDF

Also originating in the 1970s (at least according to Wikipedia), TF-IDF is a common statistical formula used in search engine ranking and document search. It counts the number of times a word is used in a document or webpage (term frequency, or TF) and weighs that against how rare the word is across the other documents in the same dataset/search query (inverse document frequency, or IDF).

So, for example, the term “icing” would often be in close proximity to “cake” in content about cooking or cake decorating. However, “the” is a common word everywhere, so even though “the” might be in an article about cake decorating, it’s not considered unique to the topic.

TF-IDF identifies which words are unique to a topic or entity as compared to other topics.
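Here is a small sketch of that arithmetic, assuming one common textbook variant: term frequency as a share of the document's words, multiplied by the natural log of (number of documents / number of documents containing the term). Search engines and libraries use their own smoothed variations, and the three "documents" below are invented for illustration.

```python
import math
from collections import Counter


def tf_idf(docs):
    """Score every term in every document with a basic TF-IDF variant."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    # Document frequency: how many documents contain each term at least once.
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    scores = []
    for tokens in tokenized:
        counts = Counter(tokens)
        scores.append({
            term: (count / len(tokens)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return scores


# Hypothetical mini-corpus: one document about cake, two about other topics.
docs = [
    "the icing on the cake",
    "the engine of the car",
    "the plot of the novel",
]
scores = tf_idf(docs)
print(scores[0]["the"], scores[0]["icing"])
```

Because “the” appears in all three documents, its inverse document frequency is log(3/3) = 0, so it scores zero no matter how often it is used. “Icing” appears in only one document, so it gets a positive score there.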

Advantages: Eliminates unnecessary garbage words that mean nothing. Highlights words that are unique or common to a particular topic.

Disadvantages: Discourages new or unique ways of talking about a specific topic. Also, encourages industry-wide jargon (as evidenced by the number of content marketing blogs that drop a lot of words like “authenticity” but never describe what qualities actually comprise “authentic”)! Also, TF-IDF encourages lists of vocabulary words (like a word cloud) without actually parsing meaning.

Word2Vec

Words used in close proximity to each other can give a strong sense of direct, relational context but not necessarily implied or indirect context — aka, all the context that comes with actually learning about a thing.

For example, the word “prince” has different context depending on whether you’re pairing it with “royalty” or “Meghan Markle” or “Purple Rain.” But here’s some context you’re probably not going to find in any article about any prince: a prince is most often a person with inherent high status.

Here’s where the whole thing gets a little over my head, so if this explanation is wholly off, give a shout.

Word vectors assign each word a list of numbers (a vector) that encodes some of its context and implied meaning. That meaning is derived from proximity (context, as with TF-IDF) or from the likelihood that a word will be followed by another specific word, as with the predictive text on your phone. Word vectors for “prince” can capture the inherent high status, even without pairing the word with “royalty.”
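To make "words as numbers" a bit more concrete, here is a toy sketch. The vectors below are hypothetical and hand-written by me, not output from Word2vec or any trained model. Real word vectors typically have hundreds of dimensions, are learned from large amounts of text, and don't come with tidy labels. The comparison uses cosine similarity, a common way to measure how closely two vectors point in the same direction.

```python
import math

# Hypothetical 3-number vectors, invented for illustration only.
# The positions loosely stand for (royalty, person, food).
vectors = {
    "prince": [0.9, 0.8, 0.0],
    "queen": [0.95, 0.75, 0.05],
    "baker": [0.05, 0.9, 0.6],
    "icing": [0.0, 0.05, 0.95],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: close to 1.0 means very similar."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_similar(word):
    """Return the other word in the toy vocabulary whose vector is closest."""
    others = (w for w in vectors if w != word)
    return max(others, key=lambda w: cosine_similarity(vectors[word], vectors[w]))


print(most_similar("prince"))
print(most_similar("icing"))
```

In this toy vocabulary, “prince” lands nearest “queen” and “icing” lands nearest “baker,” purely because of the numbers I typed in. A trained model gets its numbers from how words are used in the text it was given, which is why the text it sees matters so much.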

Here’s an explanation of Word2vec, a neural net patented by Google in 2013 that “vectorizes” words:

Given enough data, usage and contexts, Word2vec can make highly accurate guesses about a word’s meaning based on past appearances. Those guesses can be used to establish a word’s association with other words (e.g. “man” is to “boy” what “woman” is to “girl”), or cluster documents and classify them by topic. Those clusters can form the basis of search, sentiment analysis and recommendations in such diverse fields as scientific research, legal discovery, e-commerce and customer relationship management.

If you’re me, you read that example and scream, “Gender is a social construction so relating ‘man’ to ‘boy’ is immensely problematic!!!” But aside from that massive red flag in the example, Word2vec can help parse the large dataset known as “the internet” so search engines work better. Google got a lot better at delivering nuanced search in 2013, so I’m assuming we can thank Word2vec.

Advantages: Word2vec and other vectorization techniques identify similarity among sets of words, and they’re generally very effective at doing so.

Disadvantages: There are many, but we’ll start here: Word2vec only understands text in relation to other published text, so new vocabularies are hard to establish. Brand new words that are covered by the news are more easily defined; words that slip into conversation on Twitter less so. And then there’s vernacular, dominant culture, discourse, dialect, etc. etc.

![Demonstration of how a drawing in a machine learning algorithm can generate words [gif]](https://cdn.substack.com/image/fetch/w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fbucketeer-e05bbc84-baa3-437e-9518-adb32be77984.s3.amazonaws.com%2Fpublic%2Fimages%2F169346c5-43b7-4b98-a6b2-f3f7af37eda3_480x270.gif)

Why it’s helpful to understand text processing systems

Computers identify mathematical patterns in datasets to make sense of information, in the same way that people look for patterns in human behavior to make sense of people. But it’s important to clarify the differences between human and machine understanding of text.

The biggest difference: machines only see the text that they are given. Text data is largely websites — used primarily for news, marketing and description — or giant corpuses of text like public domain literature (from before 1928) or the complete works of Shakespeare. Marketing copy, current news… or Shakespeare. There’s a massive continuum in between those points that machines will miss.

Computers also do not recognize the mundane; we don’t share the unexceptional, so everyday conversations and general connotation fail to register. Twitter’s digital conversation remedies some of that, but Twitter has its own language — and a computer can never really take a subtweet in context, because the whole point of a subtweet or a snide comment is to only be heard by a select few.

Voice search and the ubiquitous, vampiric Alexa also provide some of that everyday conversation. But again: we don’t write the way we talk, and the way we talk at home is different from how we talk when we’re out or with friends. Only some homes are comfortable with Alexa’s always-on ears. What I’m saying is: Computers only understand some of the context. People see and process everything, whether we notice it or not.

Understanding the context of how machines do/do not process context is critical for a livable creative future.

As with last week, this entire newsletter’s understanding of machine learning and AI was greatly informed by Janelle Shane’s You Look Like a Thing and I Love You. It’s awesome!
