A version of this post originally appeared in the June 25, 2020 issue with the email subject line "Let me be cautious about Googling that for you" and a review of AI transcription software Otter.ai.

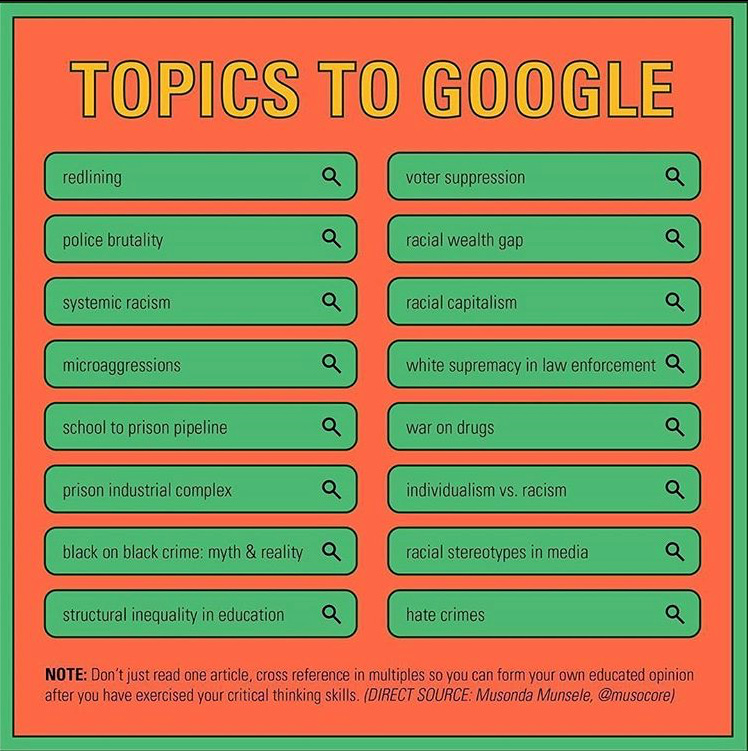

We are educating ourselves on racism and anti-racism and that is a good thing, but there are vast gaps in knowledge among people of all races, especially white people. So we buy books and compile lists of links. So many lists of so many links, in Google Docs, in Linktrees.

But as we’re trying to raise the common denominator of consciousness, we are also asking people to reassess historically racist concepts with everyone’s favorite information power broker: Google.

In frustration we say, “Just Google it!” to family or friends who don’t get the basics. We often say the phrase without Googling the complex historical term ourselves, which means we’re putting a lot of trust in a for-profit megacorporation with the hubris to say their mission is “to organize the world's information and make it universally accessible and useful” while quietly removing internal diversity initiatives in the months leading up to George Floyd’s murder.

I’ve written before about this problem with authority at Google, but it deserves a deeper dive.

Google’s organic search algorithms are a technological marvel, and it says a lot about the tool’s perceived objectivity that even activists are still generally comfortable with saying “Just Google it!”

Racism in search is harder to find than it used to be, but still very much there

Plenty of problematic content finds its way to the top of search results — as described by Safiya Umoja Noble in her excellent book Algorithms of Oppression — but the organic search engine doesn’t get the same public scrutiny as YouTube, Twitter and Facebook for promoting misinformation.

Google organic search results don’t encourage the spread of misinformation in the same way social algorithms do, but they’re still algorithmically generated content that repeats the often racist dataset it’s scanning. (Technically they could repeat a radical antiracist dataset as well, but I venture to guess that there are far fewer well-optimized antiracist websites.) Google’s problems have more to do with the deep-rooted problems in the media and communications industry and less to do with Russian bot manipulators.

Since the publication of Noble’s book in 2018, Google has updated many times to lessen, but not eliminate, how algorithms replicate and channel racism in search results. In an interview earlier this month, Noble described how she has still seen racism in the algorithm in the past few weeks.

For example, at the moment #BlackLivesMatter is calling for the “defunding” of police. People are turning to the Internet to figure out what that idea means. Google is going to be very important in making visible reliable information about this call, or in subverting those calls with information that subverts the message, particularly as people turn up to vote on ideas that may range from funding greater social services to establishing non-violent community policing initiatives to abolition of the militarized police state.

Noble also describes how media organizations may optimize content for search terms and news frames that reflect long-standing racism, like adding a “criminal past” modifier to the name of a victim of police violence to attract search traffic from audiences that either are racist or don’t know how to deconstruct that particularly racist frame.

Those are the most egregious examples of how organic search engines like Google channel or reinforce racism under the same guise of “objectivity” that news organizations have long been under fire for and are now actually acknowledging.

Google’s claim to objectivity is quite similar to that of the news industry, except Google’s human factors are obfuscated, giving the search engine the veneer of neutrality when it’s a human-designed medium like any other. Google would like consumers to think that it operates with magic, even as it designs algorithms that weigh and organize meaningful words with value.

How do I know these things? I’ve worked in and around Search Engine Optimization (SEO) for 7 years, with 3 of those years exclusively practicing SEO at a performance marketing agency. I’ve worked with major publications and brands on their SEO. If you only have a vague idea of what SEO is, it’s the practice of making websites easier to read for search engines like Google.

My observations on Google search

Below are considerations I’ve acquired over the years, never all at once, through a lot of SEO project work analyzing why Google produces the results that it does.

1. What you see on a Google search results page is controlled by multiple algorithms and programs.

When you Google a query, you get a search engine results page, or a SERP.

Google’s SERPs have lots of little boxes and highlights and widgets, even though we tend to call them all “Google.” Content in each of these widgets is surfaced from a different algorithm with different levels of editorial control.

The two main algorithms that produce SERPs are:

- Google classic search results - the 10 blue links + descriptions that show up when you search on a specific query, controlled by the Google organic search algorithm.

- Google Ads (aka Adwords/PPC/SEM) - Populated on search results pages with the “Ad” label and controlled by auctioning algorithms and the Google Ads control center.

For the most part, the distinction between those two algorithms is handled much like sales v. editorial in a journalism operation. Google Ads and organic search results are run through very different algorithms and managed in wholly different environments.* That said, the head of Google’s Ads division just moved over to run organic search, so TBD on that continued separation.

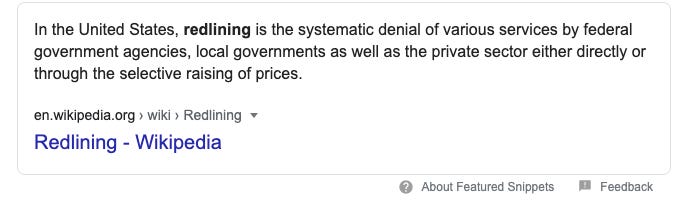

Google does not show ads on SERPs for most educational or “controversial” search queries, such as “redlining” or “racism,” so a company can’t pay to have their own faulty information on search results. Google’s moderators determine which queries populate ads and which do not.

In addition to the classic organic algorithm results, several other non-paid algorithms also appear on the search results page.

- Autocomplete - Your autocomplete results are heavily edited by humans (I assume at Google). You may remember when the autocomplete used to populate with terrible things (as on the cover of Algorithms of Oppression). Now it populates with far fewer terrible things, mostly because of phrase blocklists and people reprogramming the algorithm.

- People also ask - From my understanding, the People Also Ask questions that populate are similar to those in autocomplete. Results for those are similarly algorithmically generated but heavily moderated by people.

- Google Knowledge Graph - These are the descriptions that come up in the side panel on desktop when you search for a brand or topic on Google. Generally Google populates these with brands’ own company descriptions or from Wikipedia, but there’s some algorithmic gerrymandering in there.

When it’s not a brand-specific query, such as the information that shows for a medical condition, Google has contracted with an expert content provider to supply Knowledge Graph content. More so than on the rest of the SERP, the content you see in the Knowledge Graph is editorially controlled.

- Featured snippet - This is the single top answer on a SERP, which is also the “quick answer” read back in response to a voice query. So if you asked your Google Home “What is redlining?” it would likely read back this answer.

The algorithm that selects the featured snippet is similar to the algorithm that ranks organic search results. It tends to choose “objective” and benign answers for search queries like the one above.

- Top stories - News stories that populate with this query are in the Google News publisher program and have the appropriate metadata on their websites so that their stories show up in Google News. The publisher program only includes qualified publishers. This algorithm is separate from others on the main SERP, and publishers you see in the news results may (but won’t always) reflect your past reading habits.

- Local pack / map results - These appear when the query you’ve selected relates to a business or location nearby. They don’t typically appear in educational search results and use a different algorithm and dataset to populate, but I should mention them because they show up sometimes! Like, if you Googled “Protests near me today.”

That’s at least seven different algorithms or variations on an algorithm determining content on one SERP for each query. There are also brand new SERP features, like this bullshit Interesting Finds, which is mildly infuriating to an SEO or maybe everyone because wtf does “interesting finds” mean.

Each feature and algorithm evaluates content with a different set of criteria and many have different datasets. The results from those algorithms complement each other, but they have varying levels of editorial interference from moderators at Google.
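If it helps to see the page as a data structure, here is a rough sketch of the SERP described above. Everything in it is my own shorthand: the `Moderation` labels, the `SerpFeature` class, and the helper functions are hypothetical names that paraphrase this post, not Google terminology or any real Google API. Features where I haven't described the human role are marked as such rather than guessed at.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class Moderation(Enum):
    """Rough labels paraphrasing this post; not Google terminology."""
    NOT_DESCRIBED = "human role not described here"
    QUERY_GATED = "moderators decide which queries show it"
    HEAVY = "algorithmic, but heavily moderated by people"
    EDITORIAL = "editorially controlled content"


@dataclass(frozen=True)
class SerpFeature:
    name: str
    labeled_as_ad: bool  # the only distinction a reader can see on the page
    moderation: Moderation


# One entry per SERP feature listed in this section (assumed labels).
SERP_FEATURES = [
    SerpFeature("Classic organic results", False, Moderation.NOT_DESCRIBED),
    SerpFeature("Google Ads", True, Moderation.QUERY_GATED),
    SerpFeature("Autocomplete", False, Moderation.HEAVY),
    SerpFeature("People also ask", False, Moderation.HEAVY),
    SerpFeature("Knowledge Graph", False, Moderation.EDITORIAL),
    SerpFeature("Featured snippet", False, Moderation.NOT_DESCRIBED),
    SerpFeature("Top stories", False, Moderation.NOT_DESCRIBED),
    SerpFeature("Local pack", False, Moderation.NOT_DESCRIBED),
]


def group_by_moderation(features):
    """Group feature names by the moderation label assigned above."""
    groups = defaultdict(list)
    for feature in features:
        groups[feature.moderation].append(feature.name)
    return dict(groups)


def visibly_labeled(features):
    """Return the features a reader can identify from a label on the page."""
    return [f.name for f in features if f.labeled_as_ad]


if __name__ == "__main__":
    for moderation, names in group_by_moderation(SERP_FEATURES).items():
        print(f"{moderation.value}: {', '.join(names)}")
    print("Labeled on the page:", visibly_labeled(SERP_FEATURES))
```

The last line of output is the uncomfortable part: of everything on the page, only the ads carry a label telling you where they came from.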

My point: The information you see on search results pages is human-moderated to various extents, and aside from the ads, it’s not clear which of those content recommendation algorithms have been moderated by humans.

*DM me sometime on why your SEO expert should never handle your Google Ads and vice versa.

2. The so-called bubble effect is limited in organic search.

Even the most digitally literate repeat the common misconception that your Google search results are highly personalized. For most general terms, like historical research, they’re not.

If you regularly visit a website that has published good content on the topic you’re searching, it might show up more prominently in your organic search results. Search results are also personalized based on your location, so businesses from your area may take up a high percentage of search results.

But chances are if you search on a non-localized, general educational topic, the results Google populates will be extremely similar across the U.S.
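If you want to check this yourself, one rough way is to have a friend in another city run the same query, copy down the top links, and measure how much the two lists overlap. The sketch below uses Jaccard overlap (shared links divided by all distinct links). The `result_overlap` function and the `example.org` URLs are hypothetical placeholders, not real search results, and the measure ignores ranking order.

```python
def result_overlap(results_a, results_b):
    """Jaccard overlap: shared URLs divided by all distinct URLs."""
    set_a, set_b = set(results_a), set(results_b)
    if not (set_a or set_b):
        return 1.0  # two empty lists are trivially identical
    return len(set_a & set_b) / len(set_a | set_b)


# Hypothetical top results for the same query, copied by hand in two cities.
chicago = ["https://example.org/a", "https://example.org/b", "https://example.org/c"]
atlanta = ["https://example.org/b", "https://example.org/c", "https://example.org/d"]

print(result_overlap(chicago, atlanta))
```

A score near 1.0 means the two of you are seeing nearly the same links. A score near 0 would suggest heavy personalization or localization for that query.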

3. SEO results typically take years.

So who gets prioritized in search results? If you’re a brand new website starting from scratch, it can take years to build up enough search equity to appear in search results. That way, you can’t put together a highly technically optimized website full of garbage information and be ranking within a week. It takes time to prove yourself to Google.

But that also means that brand new community-led organizations with an anti-racist perspective aren’t necessarily going to show up either. I’ve noticed for Black Lives Matter information, more recent activist websites are ranking more highly, and I wonder if there was some algorithmic meddling on the back end to give them more prominence.

Generally, though, Google doesn’t mess with the website equity priorities unless a topic is considered controversial.

4. Organizational stature is also important.

Organizational equity is important. Google prioritizes official organizations. Government agencies and established national nonprofits (y’know, the experts who get quoted on the news) have more established authority in search engines. News websites show up more often because they are considered more neutral. And “apolitical” organizations like the bougie Aspen Institute show up on search results for anti-racism topics, rather than Black-led community organizations.

5. SEO is not cheap.

Google organic search optimization is a long, tedious process that takes several months to establish, and requires ongoing maintenance after that. Even with the best experts and the best content, websites still have to “do SEO” so Google and other search engines can read their content. Companies with more resources can pay for better SEO, and those companies show up more often in search.

Hiring SEO help is like hiring a lobbyist to Google. It’s a process and the process always changes in these tiny incremental bits, and, like, we SEO experts may have gotten into this business because we get a special kick out of completing digital paperwork.

6. Google tends to favor search results from around 2007-2015.

Google’s database favors older content in many instances for more generalized educational queries. Especially when you’re searching on terms like “racial justice at work,” you’ll probably find a lot of websites from 2012 without digging too deeply. I keep expecting Google’s trend of overpromoting early 2010s content to subside and maybe start promoting content from, like, 2017, but the algorithm still seems to really really like the early 2010s.

7. Google can control every single item that shows up on search results when it wants to.

Case in point: COVID-19. Google launched new menus and other UI elements entirely for COVID-19! The algorithms for coronavirus-related queries rightly gave high priority to WHO and CDC websites or long established health sources like The Mayo Clinic. (I wrote about the algorithm’s reading of COVID-related content earlier this year.)

Until this year, I would have said that most search results are primarily algorithmically generated with only human tweaking at the algorithmic level. But Google turned on a fucking dime for COVID (as we all did). It was the right move for Google to promote correct information about a new illness, but as an SEO, it was like Google admitting, finally, that people do tweak all these search results, directly or indirectly.

8. Google is intentionally opaque about their technology.

The $80bn SEO industry exists because Google purposefully obfuscates all the criteria that go into each SERP. Google’s maxim is that their algorithms are proprietary information, and if they tell people the criteria directly, people will abuse that knowledge.

Any industry info we have about Google algorithm updates tends to come from the very weird SEO press, who analyze patent filings and address tiny changes on search results pages with giant fucking blog posts asking, What does it all mean? If you ever want to talk to a nerd about how an algorithm may or may not process metadata minutiae, check out an SEO blog.

Discussing the bald criteria for search engine optimization, including how Google develops natural language processing models like Word2Vec, would mean that Google admits that its algorithms are controlled and managed by people. Outlining those criteria would mean admitting that Google search results are an editorial product rather than a tech product, which is a shaky legal area.

Google wants you to think its algorithms are a magic library free of political perspective. But Google is not a library. It is responsible to shareholders, not the public interest. It will not explain how it processes metadata as the library might explain the Library of Congress Classification or Dewey Decimal System. The only way you learn how Google works is if you work there, and then you probably only know part of the equation, like some French distilling monk. And if you work there, you sign NDAs into oblivion.

Can I still tell people to Google things?

Yes, of course. The Google algorithm is amazing. Google’s advantage is that users can compare sources right on the search results page. Most users only look at one result, but when researching, people tend to look at many more links.

But definitely be aware that Google is not a public service. It’s probably helpful to have an awareness of someone’s tech literacy levels before telling them to Google. Definitely provide a media literacy disclaimer as with the one above: Multiple sources is good! Single source is definitely not as good!

And remain cognizant of your own tech literacy, especially when it comes to companies that aren’t forthright about their structure and practices. Algorithms are never magic; they’re highly corporate and highly manipulatable. They hold an enormous amount of power over the information economy. They’re built in a human environment, by humans who have biases. They’re built by humans who specifically do not want the general public to understand the work they are doing, which remains immensely problematic!